Replacing coders with AI? Why Bill Gates, Sam Altman and experience say you shouldn’t.

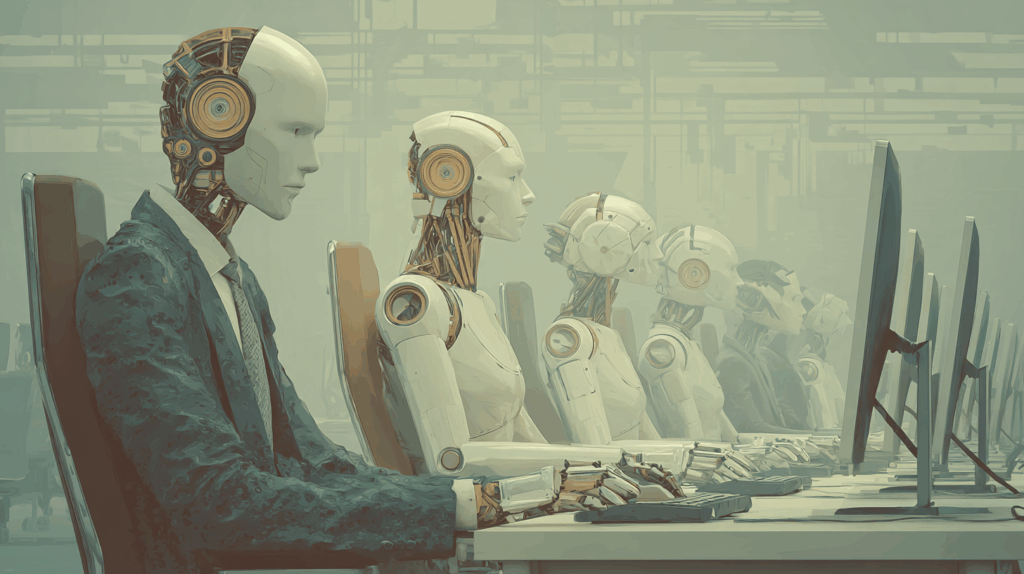

In the race to automate everything – from customer service to code – AI is being heralded as a silver bullet. The narrative is seductive: AI tools that can write entire applications, streamline engineering teams and reduce the need for expensive human developers, as well as the people in hundreds of other jobs. But from my point of view …
